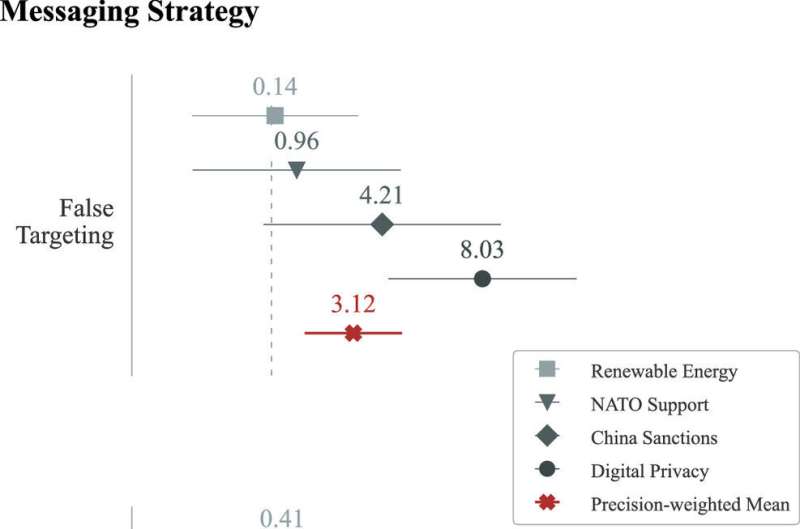

Political microtargeting does not increase GPT-4’s persuasive impact relative to non-targeted messages. Credit: Proceedings of the National Academy of Sciences (2024). DOI: 10.1073/pnas.2403116121

Recent advances in large language models (LLMs) raise the prospect of automated, fine-grained political microtargeting on an unprecedented scale. When combined with existing databases of personal data, for instance, LLMs could tailor messages to appeal to specific individuals' vulnerabilities and values. A 28-year-old non-religious liberal woman with a college degree might receive a very different message from a 61-year-old religious conservative man with only a high school education.

In their new paper, "Assessing the Persuasive Power of Political Microtargeting with Large-Scale Language Models," published in PNAS, Oxford Internet Institute (OII) postdoctoral researcher Kobi Hackenburg and Professor Helen Margetts address this possibility, investigating the extent to which access to individual-level data could increase the persuasive impact of OpenAI's GPT-4 model.

By building a custom web application that could integrate demographic and political data into GPT-4 prompts in real time, OII researchers generated thousands of unique messages tailored to persuade individual users.

The researchers deployed this application in a pre-registered randomized controlled trial and found that messages generated by GPT-4 were broadly persuasive. Importantly, however, the overall persuasive impact of microtargeted messages was not statistically different from that of non-microtargeted messages.

This suggests that, contrary to widely held assumptions, the persuasive influence of current LLMs lies not in their ability to tailor messages to individuals, but rather in the persuasiveness of their generic, non-targeted messages.

Kobi Hackenburg said, "There are actually two plausible explanations for this result: either text-based microtargeting is not a very effective messaging strategy in itself, or GPT-4 simply cannot microtarget effectively when deployed in a manner similar to our experimental design."

“For example, we already know that even today’s state-of-the-art LLMs cannot always reliably reflect the opinion distribution of fine-grained demographic groups, a feature that seems necessary for precise microtargeting.”

Professor Helen Margetts added: "The findings are important because leaders of major technology companies have made sweeping claims about the persuasive power of microtargeting via LLMs like GPT. This study goes some way to refuting those claims. In contrast, it highlights the persuasive power of GPT's 'best messages' on specific policy issues, without targeting."

For more information:

Kobi Hackenburg et al. “Assessing the Persuasive Power of Political Microtargeting with Large-Scale Language Models” Proceedings of the National Academy of Sciences (2024). DOI: 10.1073/pnas.2403116121

Courtesy of University of Oxford

Citation: New Study Evaluates Effectiveness of Large-Scale Language Models for Political Microtargeting (June 26, 2024), retrieved June 26, 2024 from https://techxplore.com/news/2024-06-effectiveness-large-language-political-microtargeting.html
